Deploying a Kubernetes cluster with kubespray

Release history

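The Kubernetes project maintains release branches for the three most recent minor releases (1.25, 1.24, and 1.23 at the time of writing). Kubernetes 1.19 and newer receive approximately 1 year of patch support. Kubernetes 1.18 and older received approximately 9 months of patch support.

Kubernetes versions are expressed as x.y.z, where x is the major version, y is the minor version, and z is the patch version, following Semantic Versioning terminology.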
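To make the x.y.z scheme concrete, here is a minimal sketch that splits a version string into its three parts. The function name `split_version` is made up for this example; v1.22.10 is the version installed later in this post.

```shell
# Split a Kubernetes version string (with or without a leading "v")
# into its major, minor, and patch components.
split_version() {
    version=${1#v}          # drop an optional leading "v"
    major=${version%%.*}    # text before the first dot
    rest=${version#*.}      # text after the first dot
    minor=${rest%%.*}       # text before the second dot
    patch=${rest#*.}        # text after the second dot
    echo "major=$major minor=$minor patch=$patch"
}

split_version v1.22.10   # prints: major=1 minor=22 patch=10
```

The sketch only handles plain x.y.z strings; pre-release suffixes such as `-rc.1` would end up in the patch field.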

- https://kubernetes.io/ko/releases/

Version Skew Policy

- https://kubernetes.io/releases/version-skew-policy/

Installing Kubernetes with deployment tools

https://kubernetes.io/ko/docs/setup/production-environment/tools/

Kubernetes The Hard Way - https://github.com/kelseyhightower/kubernetes-the-hard-way

kubeadm - https://github.com/kubernetes/kubeadm

kubespray (kubeadm + ansible) - https://github.com/kubernetes-sigs/kubespray

minikube - https://github.com/kubernetes/minikube, https://minikube.sigs.k8s.io/docs/start/

kops - https://github.com/kubernetes/kops

Test environment

$ grep -m1 'model name' /proc/cpuinfo

model name : Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

$ free -h

total used free shared buff/cache available

Mem: 27Gi 10Gi 6.9Gi 13Mi 9.8Gi 16Gi

Swap: 2.0Gi 1.0Mi 2.0Gi

$ df -h | grep -Ev 'tmpfs|loop'

Filesystem Size Used Avail Use% Mounted on

udev 14G 0 14G 0% /dev

/dev/vda5 196G 24G 163G 13% /

/dev/vda1 511M 4.0K 511M 1% /boot/efi

Server configuration

| Hostname | IP | Role | CPU | Memory (MB) | Notes |
|---|---|---|---|---|---|
| kube-control1 | 192.168.56.11 | Control Plane | 4 | 4096 | |
| kube-node1 | 192.168.56.21 | Node | 2 | 4096 | |
| kube-node2 | 192.168.56.22 | Node | 2 | 4096 | |
| kube-node3 | 192.168.56.23 | Node | 2 | 4096 | |

Prerequisites
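The two prerequisites in this section (swap off, IPv4 forwarding on) can be checked without changing anything, before and after running the steps. The following is a rough sketch, not part of kubespray. The function names are made up, and each function takes the file to inspect as an optional argument so it can be tried against sample files.

```shell
# Read-only checks for the prerequisites in this section.
# The default paths are the real kernel interfaces on Linux.

# Succeeds when no swap area is active. /proc/swaps always starts with
# one header line, so any further line is an active swap area.
swap_is_off() {
    swaps_file=${1:-/proc/swaps}
    awk 'NR > 1 { active = 1 } END { exit (active ? 1 : 0) }' "$swaps_file"
}

# Succeeds when IPv4 forwarding is enabled (the file contains "1").
ip_forward_is_on() {
    forward_file=${1:-/proc/sys/net/ipv4/ip_forward}
    [ "$(cat "$forward_file")" = "1" ]
}

if swap_is_off; then echo "swap: off"; else echo "swap: still active"; fi
if ip_forward_is_on; then echo "ip_forward: on"; else echo "ip_forward: off"; fi
```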

1. Disable swap
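The sed expression used in this step comments out every line in /etc/fstab that contains the word "swap". Its effect can be previewed on a scratch copy first. The sketch below uses made-up fstab entries, and `comment_swap_entries` is a hypothetical helper name.

```shell
# Apply the same expression as in this step, but print the result
# instead of editing the file in place.
comment_swap_entries() {
    sed 's/.*swap.*/#&/' "$1"
}

# Sample entries, invented for illustration only.
scratch=$(mktemp)
cat > "$scratch" << 'EOF'
UUID=1111-2222 / ext4 defaults 0 1
/swapfile none swap sw 0 0
EOF

comment_swap_entries "$scratch"
rm -f "$scratch"
```

Only the `/swapfile` line comes back prefixed with `#`. Note that the expression also matches lines that are already commented out, so running it twice adds a second `#`, which is harmless.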

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

2. Configure and verify IP forwarding

echo 1 > /proc/sys/net/ipv4/ip_forward

$ cat /proc/sys/net/ipv4/ip_forward

1

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system

Installing kubespray

Control plane tasks

Generate and copy SSH keys (account: vagrant / vagrant)
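The key has to be copied to every host in the server table, including the control plane itself. As a sketch, the repeated ssh-copy-id calls in this step could be written as a loop. `HOSTS`, `SSH_USER`, `DRY_RUN`, and `copy_keys` are names chosen for this example. By default the loop only prints the commands.

```shell
# Addresses from the server table; "vagrant" is the account used in this post.
HOSTS="192.168.56.11 192.168.56.21 192.168.56.22 192.168.56.23"
SSH_USER=vagrant

# Print the ssh-copy-id command for every host (DRY_RUN=1, the default),
# or actually run it when DRY_RUN=0.
copy_keys() {
    for host in $HOSTS; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh-copy-id $SSH_USER@$host"
        else
            ssh-copy-id "$SSH_USER@$host"
        fi
    done
}

copy_keys
```

Running `DRY_RUN=0 copy_keys` would prompt for the password and host-key confirmation once per host.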

$ ssh-keygen -f ~/.ssh/id_rsa -N ''

Generating public/private rsa key pair.

Your identification has been saved in /home/vagrant/.ssh/id_rsa

Your public key has been saved in /home/vagrant/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:6wHWOUrODYmeMAZJLNJk2FnOJd0R0ueiY+tkFBpBk8s vagrant@kube-control1

The key's randomart image is:

+---[RSA 3072]----+

|.*o+*ooooo |

|=++o.=..o . |

|= .+.. o |

| . E+ +... |

| + o B.S. |

| . + B+= o |

| o.*o+ |

| o.. . |

| .. . |

+----[SHA256]-----+

ssh-copy-id vagrant@192.168.56.11

$ ssh-copy-id vagrant@192.168.56.21

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/vagrant/.ssh/id_rsa.pub"

The authenticity of host '192.168.56.21 (192.168.56.21)' can't be established.

ECDSA key fingerprint is SHA256:QLk+YEc2ZU1qNye9PcvqL9B33bemsEU1VSmycNLmx6s.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

vagrant@192.168.56.21's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'vagrant@192.168.56.21'"

and check to make sure that only the key(s) you wanted were added.

ssh-copy-id vagrant@192.168.56.22

ssh-copy-id vagrant@192.168.56.23

sudo timedatectl set-timezone Asia/Seoul

Install python3, pip, and git

sudo apt update

sudo apt install -y python3 python3-pip git vim

Install kubespray

cd ~

Clone the kubespray git repository (v2.19.1, the latest release at the time of writing)

git clone --single-branch --branch v2.19.1 https://github.com/kubernetes-sigs/kubespray.git

cd kubespray

Check and install dependencies
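kubespray pins exact versions in requirements.txt, so it can be handy to look up a single pin, for example to compare it with what is already installed. This is a minimal sketch that assumes every line has the `name==version` form shown in this step. `pinned_version` is a made-up helper name.

```shell
# Print the version pinned for one package in a pip requirements file
# whose lines have the form "name==version".
pinned_version() {
    req_file=$1
    package=$2
    awk -F'==' -v pkg="$package" '$1 == pkg { print $2 }' "$req_file"
}

# Demo against a scratch file holding three of the pins from this step.
sample=$(mktemp)
printf 'ansible==5.7.1\nansible-core==2.12.5\njinja2==2.11.3\n' > "$sample"
pinned_version "$sample" ansible-core   # prints: 2.12.5
rm -f "$sample"
```

Inside the cloned repository the call would be `pinned_version requirements.txt ansible-core`. The match is exact, so asking for `ansible` does not also return the `ansible-core` pin.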

$ cat requirements.txt

ansible==5.7.1

ansible-core==2.12.5

cryptography==3.4.8

jinja2==2.11.3

netaddr==0.7.19

pbr==5.4.4

jmespath==0.9.5

ruamel.yaml==0.16.10

ruamel.yaml.clib==0.2.6

MarkupSafe==1.1.1

$ sudo pip3 install -r requirements.txt

Collecting ansible==5.7.1

Downloading ansible-5.7.1.tar.gz (35.7 MB)

|████████████████████████████████| 35.7 MB 1.6 kB/s

Collecting ansible-core==2.12.5

Downloading ansible-core-2.12.5.tar.gz (7.8 MB)

|████████████████████████████████| 7.8 MB 13 kB/s

...

Create the inventory (from the sample)

cp -rfp inventory/sample inventory/mycluster

Edit inventory.ini
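Each host line in the `[all]` section repeats the address twice (`ansible_host` and `ip`). As an alternative to typing it by hand, the section could be generated from a list of name and address pairs. The sketch below is one way to do that. `inventory_all` is a hypothetical helper and not part of kubespray.

```shell
# Emit the [all] section of an inventory from "name ip" pairs on stdin.
inventory_all() {
    echo "[all]"
    while read -r name ip; do
        [ -n "$name" ] || continue    # skip blank lines
        echo "$name ansible_host=$ip ip=$ip"
    done
}

inventory_all << 'EOF'
kube-control1 192.168.56.11
kube-node1 192.168.56.21
kube-node2 192.168.56.22
kube-node3 192.168.56.23
EOF
```

The group sections (`[kube_control_plane]`, `[etcd]`, `[kube_node]`, and so on) still have to be filled in manually. Once the file is saved, `ansible-inventory -i inventory/mycluster/inventory.ini --graph` prints the resulting group tree for a quick check.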

vim inventory/mycluster/inventory.ini

[all]

kube-control1 ansible_host=192.168.56.11 ip=192.168.56.11

kube-node1 ansible_host=192.168.56.21 ip=192.168.56.21

kube-node2 ansible_host=192.168.56.22 ip=192.168.56.22

kube-node3 ansible_host=192.168.56.23 ip=192.168.56.23

[kube_control_plane]

kube-control1

[etcd]

kube-control1

[kube_node]

kube-node1

kube-node2

kube-node3

[calico_rr]

[k8s_cluster:children]

kube_control_plane

kube_node

calico_rr

Edit addons.yml (review and change parameters)

vim inventory/mycluster/group_vars/k8s_cluster/addons.yml

metrics_server_enabled: true

ingress_nginx_enabled: true

metallb_enabled: true

metallb_ip_range:

- "192.168.56.200-192.168.56.209"

metallb_protocol: "layer2"
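The `metallb_ip_range` entry bounds how many addresses MetalLB can hand out, so it is worth checking the size of the range. The sketch below counts the addresses in a `start-end` range. The helper names `ip_to_int` and `range_size` are invented for this example.

```shell
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Count the addresses in an inclusive "start-end" range.
range_size() {
    start=${1%-*}
    end=${1#*-}
    echo $(( $(ip_to_int "$end") - $(ip_to_int "$start") + 1 ))
}

range_size "192.168.56.200-192.168.56.209"   # prints: 10
```

The range here also sits in the same 192.168.56.0/24 network as the nodes, which is what layer2 mode needs for the addresses to be reachable.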

Edit k8s-cluster.yml (review and change parameters)

vim inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

kube_proxy_strict_arp: true

container_manager: docker
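If the same edits need to be repeated on several inventories, the one-line settings in addons.yml and k8s-cluster.yml could be applied from a script instead of vim. This is a rough sketch under the assumption that each setting is a top-level `key: value` line, either active or commented out. `set_scalar` is a made-up helper and the scratch file contents are invented. List values such as `metallb_ip_range` are not covered.

```shell
# Set a top-level scalar "key: value" line in a YAML file, whether the
# key is currently active or commented out ("# key: ..."). If the key is
# absent, append it. Values must not contain the "|" character.
set_scalar() {
    file=$1
    key=$2
    value=$3
    if grep -Eq "^#? *$key:" "$file"; then
        sed -E "s|^#? *$key:.*|$key: $value|" "$file" > "$file.tmp" &&
            mv "$file.tmp" "$file"
    else
        echo "$key: $value" >> "$file"
    fi
}

# Demo on a scratch file rather than the real group_vars files.
scratch=$(mktemp)
printf 'kube_proxy_strict_arp: false\n# container_manager: containerd\n' > "$scratch"
set_scalar "$scratch" kube_proxy_strict_arp true
set_scalar "$scratch" container_manager docker
cat "$scratch"
rm -f "$scratch"
```

Against the real files the first argument would be, for example, `inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml`. Reviewing the result with `git diff` afterwards is a good idea, since a regex edit knows nothing about YAML structure.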

Verify ansible connectivity
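With more than a handful of hosts, scanning the ad-hoc output by eye gets tedious. The sketch below filters the output down to hosts that did not report SUCCESS or CHANGED. It assumes the `host | STATUS ...` result lines that the commands in this post print, and `problem_hosts` is a hypothetical helper name.

```shell
# Read ad-hoc ansible output on stdin and print every host whose status
# is neither SUCCESS nor CHANGED (for example UNREACHABLE! or FAILED!).
problem_hosts() {
    awk '$2 == "|" && $3 != "SUCCESS" && $3 != "CHANGED" { print $1 }'
}

# Demo with hand-written sample lines; the UNREACHABLE host is invented.
problem_hosts << 'EOF'
kube-node1 | SUCCESS => {
    "ping": "pong"
}
kube-node2 | UNREACHABLE! => {
kube-node3 | CHANGED | rc=0 >>
EOF
```

In practice the function would sit at the end of a pipe, as in `ansible all -i inventory/mycluster/inventory.ini -m ping | problem_hosts`. Empty output means every host answered.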

$ ansible all -i inventory/mycluster/inventory.ini -m ping

[WARNING]: Skipping callback plugin 'ara_default', unable to load

kube-node3 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

kube-node2 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

kube-node1 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

kube-control1 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

Update the apt cache (all nodes)

$ ansible all -i inventory/mycluster/inventory.ini -m apt -a 'update_cache=yes' --become

[WARNING]: Skipping callback plugin 'ara_default', unable to load

kube-control1 | CHANGED => {

"cache_update_time": 1663594656,

"cache_updated": true,

"changed": true

}

kube-node3 | CHANGED => {

"cache_update_time": 1663594662,

"cache_updated": true,

"changed": true

}

kube-node1 | CHANGED => {

"cache_update_time": 1663594663,

"cache_updated": true,

"changed": true

}

kube-node2 | CHANGED => {

"cache_update_time": 1663594662,

"cache_updated": true,

"changed": true

}

Set the timezone and verify the time and timezone

$ ansible all -i inventory/mycluster/inventory.ini -a 'sudo timedatectl set-timezone Asia/Seoul' --become

[WARNING]: Skipping callback plugin 'ara_default', unable to load

kube-node1 | CHANGED | rc=0 >>

kube-node3 | CHANGED | rc=0 >>

kube-node2 | CHANGED | rc=0 >>

kube-control1 | CHANGED | rc=0 >>

$ ansible all -i inventory/mycluster/inventory.ini -a 'date'

[WARNING]: Skipping callback plugin 'ara_default', unable to load

kube-control1 | CHANGED | rc=0 >>

Mon Sep 19 22:43:04 KST 2022

kube-node3 | CHANGED | rc=0 >>

Mon Sep 19 22:43:04 KST 2022

kube-node2 | CHANGED | rc=0 >>

Mon Sep 19 22:43:04 KST 2022

kube-node1 | CHANGED | rc=0 >>

Mon Sep 19 22:43:04 KST 2022

$ ansible all -i inventory/mycluster/inventory.ini -a 'timedatectl'

[WARNING]: Skipping callback plugin 'ara_default', unable to load

kube-node2 | CHANGED | rc=0 >>

Local time: Mon 2022-09-19 22:44:55 KST

Universal time: Mon 2022-09-19 13:44:55 UTC

RTC time: Mon 2022-09-19 13:44:53

Time zone: Asia/Seoul (KST, +0900)

System clock synchronized: no

NTP service: inactive

RTC in local TZ: no

kube-node3 | CHANGED | rc=0 >>

Local time: Mon 2022-09-19 22:44:55 KST

Universal time: Mon 2022-09-19 13:44:55 UTC

RTC time: Mon 2022-09-19 13:44:53

Time zone: Asia/Seoul (KST, +0900)

System clock synchronized: no

NTP service: inactive

RTC in local TZ: no

kube-node1 | CHANGED | rc=0 >>

Local time: Mon 2022-09-19 22:44:55 KST

Universal time: Mon 2022-09-19 13:44:55 UTC

RTC time: Mon 2022-09-19 13:44:54

Time zone: Asia/Seoul (KST, +0900)

System clock synchronized: no

NTP service: inactive

RTC in local TZ: no

kube-control1 | CHANGED | rc=0 >>

Local time: Mon 2022-09-19 22:44:55 KST

Universal time: Mon 2022-09-19 13:44:55 UTC

RTC time: Mon 2022-09-19 13:44:53

Time zone: Asia/Seoul (KST, +0900)

System clock synchronized: no

NTP service: inactive

RTC in local TZ: no

Run the ansible playbook (installs Kubernetes v1.22.10)

ansible-playbook -i inventory/mycluster/inventory.ini cluster.yml -e kube_version=v1.22.10 --become

Fetch the credentials

mkdir -p ~/.kube

sudo cp /etc/kubernetes/admin.conf ~/.kube/config

sudo chown $USER:$USER ~/.kube/config

kubectl command autocompletion

kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null

echo 'source <(kubectl completion bash)' >>~/.bashrc

exec bash

Verify the Kubernetes cluster
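For a scripted check, the node list can be reduced to the nodes that are not yet Ready. This is a small sketch that assumes the column layout of `kubectl get nodes --no-headers` (name first, status second). `not_ready_nodes` is a made-up helper name, and the NotReady line in the demo is invented.

```shell
# Read "kubectl get nodes --no-headers" output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NF >= 2 && $2 != "Ready" { print $1 }'
}

# Demo with sample lines instead of a live cluster.
not_ready_nodes << 'EOF'
kube-control1 Ready control-plane,master 70m v1.22.10
kube-node1 NotReady <none> 67m v1.22.10
kube-node2 Ready <none> 67m v1.22.10
EOF
```

Against the cluster this would be `kubectl get nodes --no-headers | not_ready_nodes`. A cordoned node shows `Ready,SchedulingDisabled` and is therefore listed too, which may or may not be what you want.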

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

kube-control1 Ready control-plane,master 70m v1.22.10

kube-node1 Ready <none> 67m v1.22.10

kube-node2 Ready <none> 67m v1.22.10

kube-node3 Ready <none> 67m v1.22.10

$ kubectl api-versions

admissionregistration.k8s.io/v1

apiextensions.k8s.io/v1

apiregistration.k8s.io/v1

apps/v1

authentication.k8s.io/v1

authorization.k8s.io/v1

autoscaling/v1

autoscaling/v2beta1

autoscaling/v2beta2

batch/v1

batch/v1beta1

certificates.k8s.io/v1

coordination.k8s.io/v1

crd.projectcalico.org/v1

discovery.k8s.io/v1

discovery.k8s.io/v1beta1

events.k8s.io/v1

events.k8s.io/v1beta1

flowcontrol.apiserver.k8s.io/v1beta1

metrics.k8s.io/v1beta1

networking.k8s.io/v1

node.k8s.io/v1

node.k8s.io/v1beta1

policy/v1

policy/v1beta1

rbac.authorization.k8s.io/v1

scheduling.k8s.io/v1

storage.k8s.io/v1

storage.k8s.io/v1beta1

v1

kubectl get pods -A

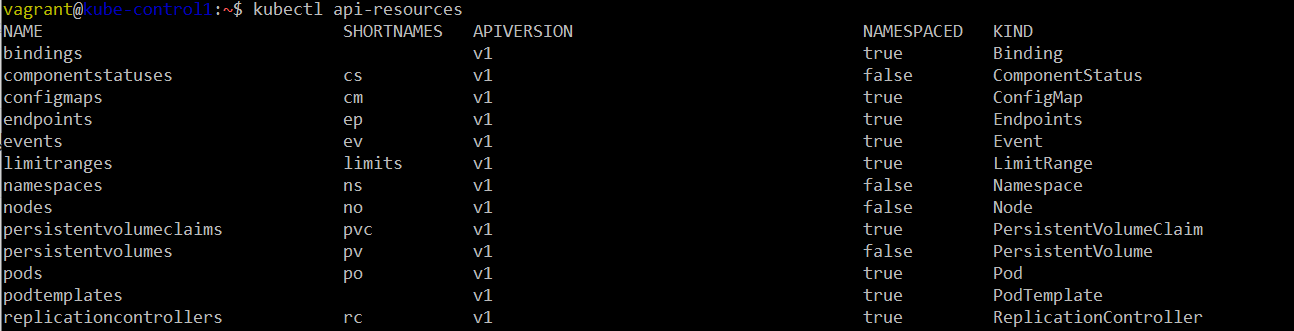

kubectl api-resources

References

- [Linux] Installing the vagrant package and deploying Ubuntu: https://sangchul.kr/406

- Enabling kubectl autocompletion: https://kubernetes.io/docs/tasks/tools/included/optional-kubectl-configs-bash-linux
